How AI Actually Works: A Deep Dive into the Technology Behind Artificial Intelligence

Introduction

AI is all around us — powering voice assistants, curating social media feeds, driving recommendation engines, and even writing articles like this one. But despite its growing influence, few people understand how AI actually works.

This article unpacks the core mechanisms behind modern AI. We'll break down terms like machine learning, neural networks, training data, and model inference — with just enough technical detail to understand the big picture.

What Is Artificial Intelligence, Really?

At its most basic, Artificial Intelligence refers to systems that mimic aspects of human intelligence — like learning, reasoning, pattern recognition, or decision-making. But AI is a broad umbrella term. What most people encounter today falls under a more specific branch called machine learning (ML).

Machine Learning: The Core of Modern AI

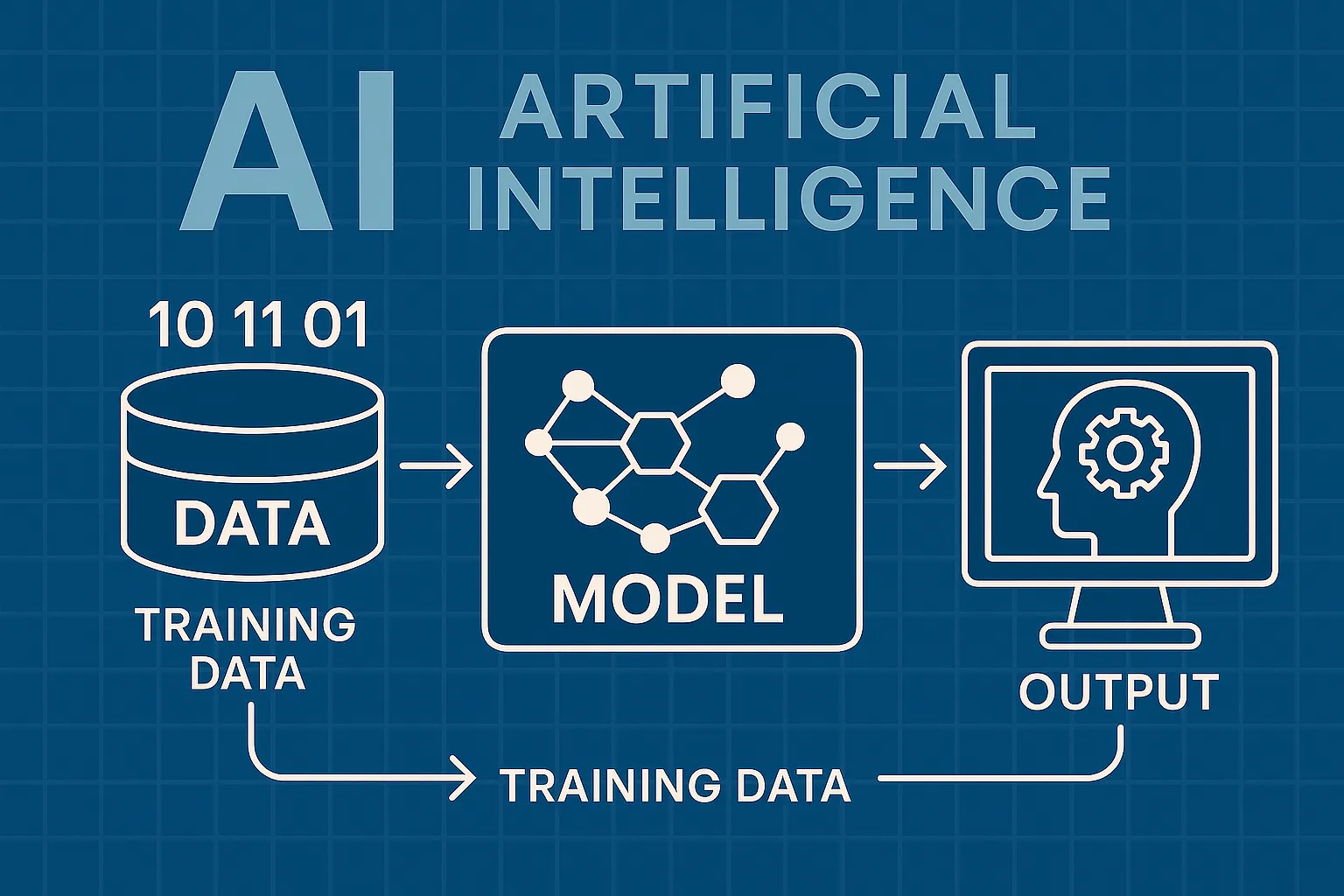

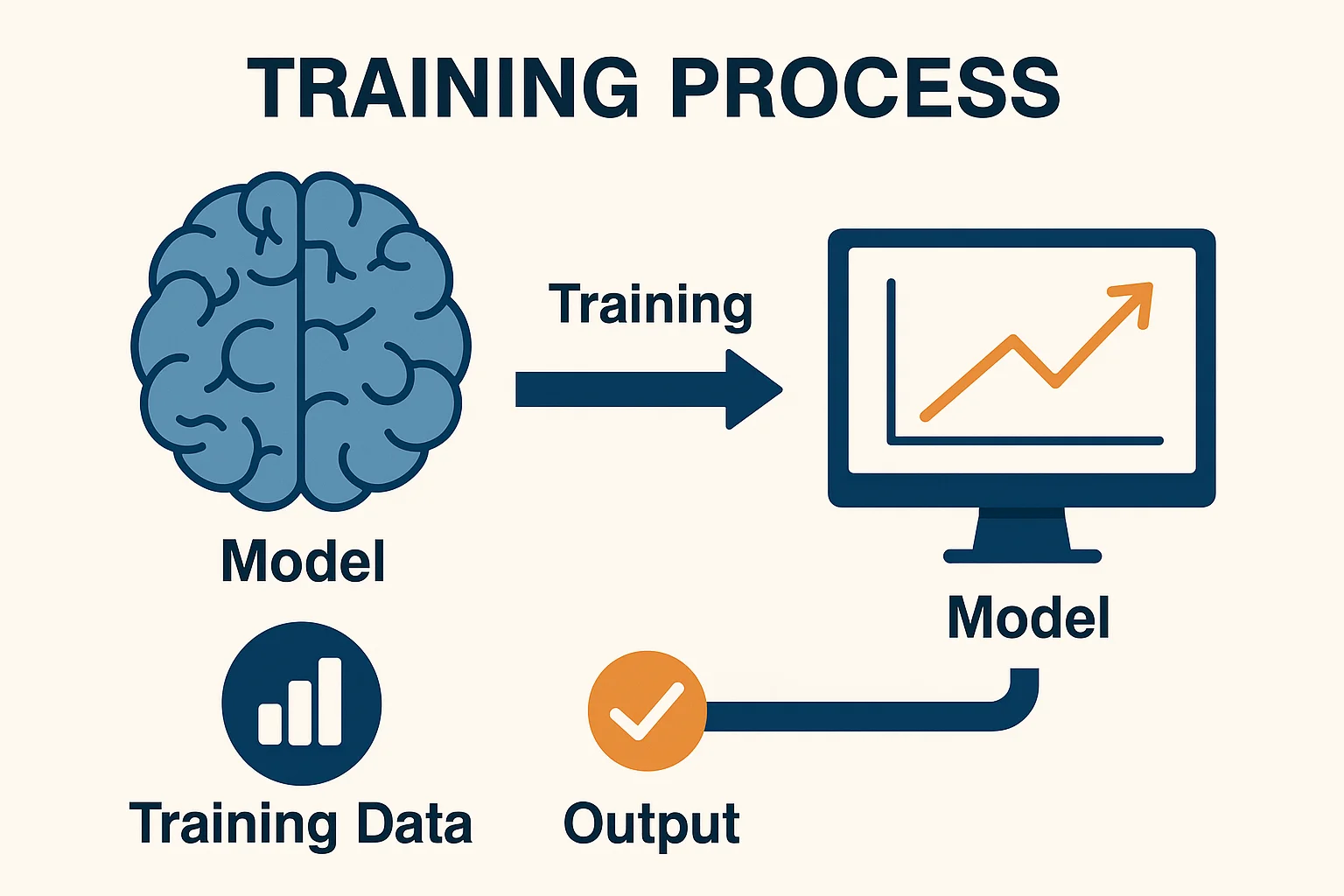

Machine Learning is the process of teaching machines to recognize patterns from data. Rather than explicitly programming rules, ML allows computers to learn those rules by analyzing large amounts of examples.

There are several types of machine learning:

- Supervised learning – The model is trained on labeled data (e.g., spam vs. non-spam emails)

- Unsupervised learning – The model finds structure in unlabeled data (e.g., clustering customer behavior)

- Reinforcement learning – The model learns through trial and error using rewards (e.g., playing a video game)
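To make the idea of learning rules from examples concrete, here is a minimal supervised-learning sketch. The feature (a count of suspicious words per email), the data values, and the function names are all invented for illustration; real spam filters use far richer features and models.

```python
# Hypothetical toy data: (suspicious word count, label).
# Label 1 = spam, 0 = not spam. Values are invented for illustration.
training_data = [(0, 0), (1, 0), (2, 0), (5, 1), (7, 1), (9, 1)]


def fit_threshold(examples):
    """Learn a decision threshold as the midpoint between the two class means."""
    spam = [x for x, y in examples if y == 1]
    ham = [x for x, y in examples if y == 0]
    mean_spam = sum(spam) / len(spam)
    mean_ham = sum(ham) / len(ham)
    return (mean_spam + mean_ham) / 2


def predict(threshold, x):
    """Classify as spam (1) when the feature exceeds the learned threshold."""
    return 1 if x > threshold else 0


threshold = fit_threshold(training_data)
print(threshold)               # 4.0
print(predict(threshold, 8))   # 1 (spam)
print(predict(threshold, 1))   # 0 (not spam)
```

Nobody wrote the rule "more than 4 suspicious words means spam" by hand. The threshold came from the labeled examples, which is the essence of supervised learning.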

Neural Networks: The Brains Behind AI

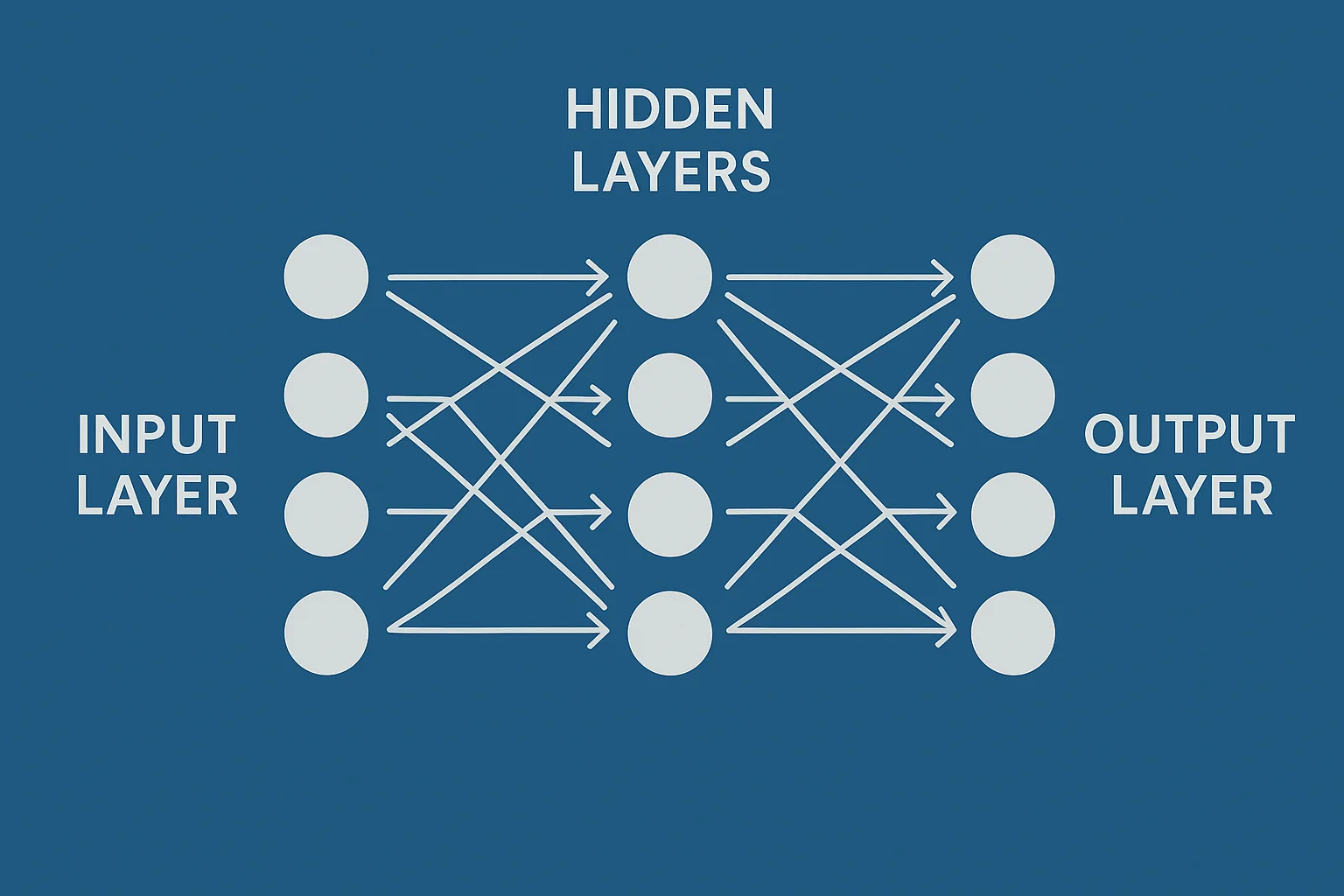

A neural network is a computational model inspired by the structure of the human brain. It consists of layers of interconnected units called neurons, which process input data.

Basic Structure:

- Input Layer: Receives raw data (e.g., pixel values from an image)

- Hidden Layers: Multiple layers that transform and abstract the data

- Output Layer: Produces the final prediction (e.g., cat or dog)

Each connection between neurons has a weight. Each neuron computes a weighted sum of its inputs and passes the result through a mathematical function called an activation function (like ReLU or sigmoid). During training, these weights are adjusted to minimize prediction errors.
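The structure described above can be sketched in a few lines of plain Python. This is only an illustration: the weights and inputs are made up, and the bias term added to each neuron is a common convention that the text above does not mention.

```python
import math


def relu(z):
    """ReLU activation: passes positive values, clips negatives to zero."""
    return max(0.0, z)


def sigmoid(z):
    """Sigmoid activation: squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


def layer(inputs, weight_rows, biases, activation):
    """A layer is a list of neurons that all read the same inputs."""
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]


# Hypothetical input and weights, chosen so the arithmetic is easy to follow.
x = [1.0, 2.0]                                                   # input layer
hidden = layer(x, [[0.5, -1.0], [1.0, 1.0]], [0.0, -1.0], relu)  # hidden layer
output = layer(hidden, [[1.0, 0.5]], [-1.0], sigmoid)            # output layer

print(hidden)  # [0.0, 2.0]
print(output)  # [0.5]
```

Running data through the layers in this direction, from input to output, is what the training section calls forward propagation.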

Training an AI Model: Step-by-Step

- Data Collection

AI needs data, often lots of it. For image classification, this might be millions of labeled pictures. For language models, it's vast text corpora scraped from the internet.

- Preprocessing

Data must be cleaned, formatted, and normalized. For example, text is tokenized into words or subwords. Images may be resized or converted to grayscale.

- Model Initialization

A neural network is set up with randomly assigned weights.

- Forward Propagation

Input data is fed through the network to produce an output (prediction).

- Loss Function

A function calculates how far off the prediction is from the actual label.

- Backward Propagation

The error is propagated backward through the network to work out how much each weight contributed to it, and an algorithm like gradient descent adjusts the weights accordingly.

- Iteration

This cycle repeats over many passes through the training data (epochs), often adding up to thousands of update steps, gradually improving accuracy.
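The training steps above can be sketched with the smallest possible model: a single weight and bias fitted by gradient descent. Everything here is an assumption made for illustration, including the toy data (points on the line y = 2x + 1), the mean squared error loss, and the learning rate. With only one "layer", the backward step reduces to two hand-written derivatives; a deep network applies the same idea through every layer using the chain rule.

```python
import random

# Data collection: toy examples drawn from the line y = 2x + 1.
data = [(x, 2.0 * x + 1.0) for x in [0.0, 1.0, 2.0, 3.0, 4.0]]

# Model initialization: random starting weight and bias.
random.seed(0)
w = random.uniform(-1, 1)
b = random.uniform(-1, 1)
learning_rate = 0.05  # assumed value; too large a rate would diverge


def mean_squared_error(w, b):
    """Loss function: average squared gap between prediction and label."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


initial_loss = mean_squared_error(w, b)

# Iteration: each epoch is one full pass over the training data.
for epoch in range(2000):
    grad_w = 0.0
    grad_b = 0.0
    for x, y in data:
        prediction = w * x + b               # forward propagation
        error = prediction - y               # how far off the prediction is
        grad_w += 2 * error * x / len(data)  # backward step: d(loss)/dw
        grad_b += 2 * error / len(data)      # backward step: d(loss)/db
    # Gradient descent: move each parameter against its gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_loss = mean_squared_error(w, b)
print(round(w, 3), round(b, 3))  # close to 2.0 and 1.0
```

The loop never sees the rule y = 2x + 1 directly. It only sees examples and a loss value, yet the weight and bias drift toward 2 and 1 because each update reduces the error a little.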

Deep Learning and Transformers

Deep Learning refers to neural networks with many layers (hence “deep”). These models can learn extremely complex patterns and have powered major breakthroughs in AI.

A key innovation in recent years is the Transformer architecture, which underlies large language models like GPT (Generative Pre-trained Transformer).

Transformers use:

- Self-attention: Each word in a sentence considers the importance of every other word, capturing context better than older models like RNNs or LSTMs.

- Parallel processing: Unlike older models that process sequences one step at a time, transformers process the whole input at once, which speeds up training.
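A rough sketch of self-attention follows. It makes a large simplification: the token vectors themselves serve as queries, keys, and values, whereas real transformers first pass them through learned projection matrices. The two-dimensional token vectors are invented for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors):
    """Scaled dot-product attention with inputs used as queries, keys, values."""
    d = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this token against every token in the sequence (itself included).
        scores = [dot(query, key) / math.sqrt(d) for key in vectors]
        weights = softmax(scores)
        # New representation: weighted mix of all token vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical embeddings for a three-token sentence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how much one token attends to every other token. The loop over queries is written sequentially for readability. No query depends on another query's result, which is why practical implementations can compute all of them at once as matrix multiplications.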

Large Language Models (LLMs)

LLMs like GPT-4 are trained on massive amounts of text data. They don’t “understand” language like humans. Instead, they learn statistical patterns in text and generate outputs by repeatedly predicting a likely next token (a word or word fragment).

Key traits of LLMs:

- Pretrained on general internet data

- Fine-tuned for specific tasks (e.g., customer service, summarization)

- Token-based generation: Outputs are generated one token at a time, influenced by probabilities
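Token-by-token generation can be illustrated with a toy stand-in for a language model. The vocabulary and the score table below are entirely hypothetical. A real LLM computes its scores with a neural network that looks at the whole context, not only the previous token.

```python
import math
import random

VOCAB = ["the", "cat", "sat", "<end>"]

# Hypothetical scores (logits) for the next token, given the previous token.
TOY_LOGITS = {
    "the": [0.0, 3.0, 0.5, 0.0],
    "cat": [0.0, 0.0, 3.0, 0.5],
    "sat": [0.5, 0.0, 0.0, 3.0],
}


def softmax(logits, temperature=1.0):
    """Convert scores to probabilities; lower temperature sharpens them."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(logits, rng, temperature=1.0):
    """Pick one token at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(VOCAB, weights=probs, k=1)[0]


def generate(prompt, max_tokens, rng):
    """Append one sampled token at a time until <end> or the length limit."""
    tokens = list(prompt)
    for _ in range(max_tokens):
        next_token = sample_next_token(TOY_LOGITS[tokens[-1]], rng)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens


rng = random.Random(0)
print(" ".join(generate(["the"], max_tokens=10, rng=rng)))
```

Because each token is sampled rather than always taken as the top choice, the same prompt can produce different outputs on different runs.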

Model Evaluation and Accuracy

Once trained, models are evaluated using metrics like:

- Accuracy – percentage of correct predictions

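- Precision/Recall – precision is the share of predicted positives that are correct; recall is the share of actual positives that are found. Both are especially useful for imbalanced data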

- F1 score – harmonic mean of precision and recall

- Loss – average prediction error
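The classification metrics above can be computed directly from counts of correct and incorrect predictions. The labels and predictions below are invented for illustration, and the function name is a placeholder rather than any library's API.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    else:
        f1 = 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical imbalanced data: 3 positives out of 10 examples.
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 1, 0, 0, 0, 0, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics["accuracy"])   # 0.7
print(metrics["precision"])  # 0.5
```

Here accuracy looks respectable at 0.7, yet only half of the predicted positives are correct. That gap is why precision and recall matter when one class is rare.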

A separate validation dataset (not seen during training) is used to ensure the model isn’t just memorizing data — a problem known as overfitting.

Deployment and Inference

Once trained, models are deployed to production — this is when they start making predictions on new, real-world data. This step is called inference.

Real-time inference (like voice assistants) requires optimized models and infrastructure that can respond quickly and accurately under hardware constraints.

Limitations of AI Today

Despite the hype, AI has many limitations:

- Lack of understanding: Models don’t comprehend, they correlate

- Bias and fairness: Training data can include social and cultural biases

- Data hunger: Modern models require huge datasets and compute resources

- Lack of general intelligence: Current AI is narrow — good at one task at a time

The Role of Data and Compute

Training modern AI models requires large datasets and substantial compute resources.

Ethics and Safety in AI

As AI capabilities grow, so do ethical challenges:

- Bias: AI systems may amplify social inequalities

- Privacy: LLMs may memorize sensitive info

- Misinformation: Tools like deepfakes and AI-generated articles can be abused

- Alignment: How do we ensure AI does what we want it to do?

Ongoing work in AI alignment, interpretability, and governance seeks to address these issues.

Conclusion

AI is not magic — it’s math, models, and massive amounts of data. Understanding how AI works demystifies the technology and helps us think critically about its role in society.

From simple linear regressions to billion-parameter transformers, AI systems rely on logical foundations and statistical techniques — not intuition or consciousness. As these tools become more powerful, knowing what’s under the hood empowers us to use them responsibly, ethically, and effectively.